Thank you, I figured it might be as simple as adding the files to resources.

I can now instantiate the importer with new OnnxFrameworkImporter(), and loading the graph with .runImport prints a long list of what I presume are all the model parameters:

[_wrapped_model.backbone.fpn_lateral2.weight, _wrapped_model.backbone.fpn_lateral2.bias, _wrapped_model.backbone.fpn_output2.weight, _wrapped_model.backbone.fpn_output2.bias, _wrapped_model.backbone.fpn_lateral3.weight, _wrapped_model.backbone.fpn_lateral3.bias, _wrapped_model.backbone.fpn_output3.weight, _wrapped_model.backbone.fpn_output3.bias, _wrapped_model.backbone.fpn_lateral4.weight, _wrapped_model.backbone.fpn_lateral4.bias, _wrapped_model.backbone.fpn_output4.weight, _wrapped_model.backbone.fpn_output4.bias, _wrapped_model.backbone.fpn_lateral5.weight, _wrapped_model.backbone.fpn_lateral5.bias, _wrapped_model.backbone.fpn_output5.weight, _wrapped_model.backbone.fpn_output5.bias, _wrapped_model.proposal_generator.rpn_head.conv.weight, _wrapped_model.proposal_generator.rpn_head.conv.bias, _wrapped_model.proposal_generator.rpn_head.objectness_logits.weight, _wrapped_model.proposal_generator.rpn_head.objectness_logits.bias, _wrapped_model.proposal_generator.rpn_head.anchor_deltas.weight, _wrapped_model.proposal_generator.rpn_head.anchor_deltas.bias, _wrapped_model.roi_heads.box_head.fc1.weight, _wrapped_model.roi_heads.box_head.fc1.bias, _wrapped_model.roi_heads.box_head.fc2.weight, _wrapped_model.roi_heads.box_head.fc2.bias, _wrapped_model.roi_heads.box_predictor.cls_score.weight, _wrapped_model.roi_heads.box_predictor.cls_score.bias, _wrapped_model.roi_heads.box_predictor.bbox_pred.weight, _wrapped_model.roi_heads.box_predictor.bbox_pred.bias, _wrapped_model.roi_heads.mask_head.mask_fcn1.weight, _wrapped_model.roi_heads.mask_head.mask_fcn1.bias, _wrapped_model.roi_heads.mask_head.mask_fcn2.weight, _wrapped_model.roi_heads.mask_head.mask_fcn2.bias, _wrapped_model.roi_heads.mask_head.mask_fcn3.weight, _wrapped_model.roi_heads.mask_head.mask_fcn3.bias, _wrapped_model.roi_heads.mask_head.mask_fcn4.weight, _wrapped_model.roi_heads.mask_head.mask_fcn4.bias, _wrapped_model.roi_heads.mask_head.deconv.weight, _wrapped_model.roi_heads.mask_head.deconv.bias, 
_wrapped_model.roi_heads.mask_head.predictor.weight, _wrapped_model.roi_heads.mask_head.predictor.bias, 1020, 1021, 1023, 1024, 1026, 1027, 1029, 1030, 1032, 1033, 1035, 1036, 1038, 1039, 1041, 1042, 1044, 1045, 1047, 1048, 1050, 1051, 1053, 1054, 1056, 1057, 1059, 1060, 1062, 1063, 1065, 1066, 1068, 1069, 1071, 1072, 1074, 1075, 1077, 1078, 1080, 1081, 1083, 1084, 1086, 1087, 1089, 1090, 1092, 1093, 1095, 1096, 1098, 1099, 1101, 1102, 1104, 1105, 1107, 1108, 1110, 1111, 1113, 1114, 1116, 1117, 1119, 1120, 1122, 1123, 1125, 1126, 1128, 1129, 1131, 1132, 1134, 1135, 1137, 1138, 1140, 1141, 1143, 1144, 1146, 1147, 1149, 1150, 1152, 1153, 1155, 1156, 1158, 1159, 1161, 1162, 1164, 1165, 1167, 1168, 1170, 1171, 1173, 1174, 1176, 1177, 1179, 1180, 1182, 1183, 1185, 1186, 1188, 1189, 1191, 1192, 1194, 1195, 1197, 1198, 1200, 1201, 1203, 1204, 1206, 1207, 1209, 1210, 1212, 1213, 1215, 1216, 1218, 1219, 1221, 1222, 1224, 1225, 1227, 1228, 1230, 1231, 1233, 1234, 1236, 1237, 1239, 1240, 1242, 1243, 1245, 1246, 1248, 1249, 1251, 1252, 1254, 1255, 1257, 1258, 1260, 1261, 1263, 1264, 1266, 1267, 1269, 1270, 1272, 1273, 1275, 1276, 1278, 1279, 1281, 1282, 1284, 1285, 1287, 1288, 1290, 1291, 1293, 1294, 1296, 1297, 1299, 1300, 1302, 1303, 1305, 1306, 1308, 1309, 1311, 1312, 1314, 1315, 1317, 1318, 1320, 1321, 1323, 1324, 1326, 1327, 1329, 1330]

but it then throws an exception:

Exception in thread "main" java.lang.NullPointerException

at org.nd4j.samediff.frameworkimport.onnx.ir.OnnxIRGraph.nodeList(OnnxIRGraph.kt:123)

at org.nd4j.samediff.frameworkimport.onnx.ir.OnnxIRGraph.<init>(OnnxIRGraph.kt:88)

at org.nd4j.samediff.frameworkimport.onnx.importer.OnnxFrameworkImporter.runImport(OnnxFrameworkImporter.kt:49)

at TestImportONNX$.delayedEndpoint$TestImportONNX$1(TestImportONNX.scala:13)

...

presumably because I am passing Collections.emptyMap() as the input to .runImport, as shown in the model-import-framework guide.

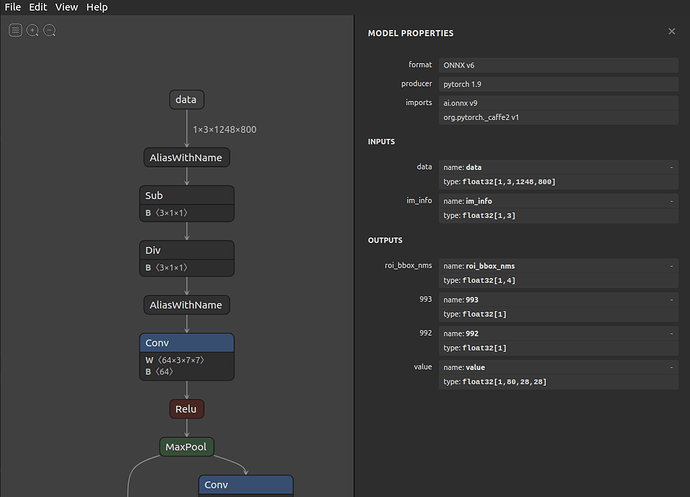

I haven’t delved this deep into DL4J before. Could you please advise on how to pass image input to the model?